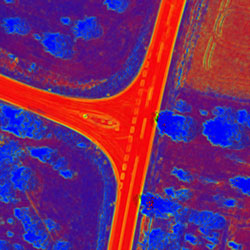

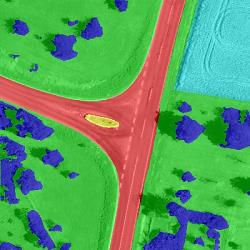

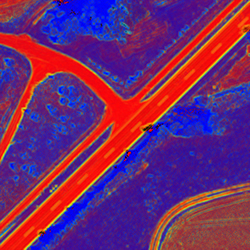

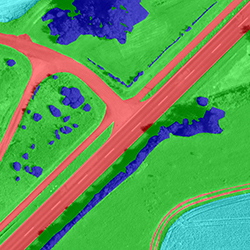

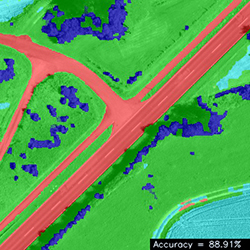

This demo covers the case where training data is available, here in the form of manually labelled images. The original images Original Image.jpg are color-infrared images, and the ground-truth images GroundTruth Image.jpg contain 6 different classes, namely road, traffic island, grass, agriculture, tree and car (instances of the last class are not represented in the image).

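    #include "DGM.h"
    #include "VIS.h"
    #include "DGM/timer.h"

    // print_help() and the required using-directives are assumed to be provided
    // before main(); they are not part of this listing.

    int main(int argc, char *argv[])
    {
        const Size imgSize   = Size(400, 400);
        const int  width     = imgSize.width;
        const int  height    = imgSize.height;
        const byte nStates   = 6;   // road, traffic island, grass, agriculture, tree, car
        const word nFeatures = 3;   // one feature per channel of the 3-channel input image

        if (argc != 9) {
            print_help(argv[0]);
            return 0;
        }

        // Reading the parameters and the images.
        // Feature and display images are resampled with Lanczos interpolation;
        // ground-truth images use nearest-neighbour so that class labels are not blended.
        int nodeModel = atoi(argv[1]);
        int edgeModel = atoi(argv[2]);
        Mat train_fv = imread(argv[3], 1); resize(train_fv, train_fv, imgSize, 0, 0, INTER_LANCZOS4);  // training features
        Mat train_gt = imread(argv[4], 0); resize(train_gt, train_gt, imgSize, 0, 0, INTER_NEAREST);   // training ground truth
        Mat test_fv  = imread(argv[5], 1); resize(test_fv, test_fv, imgSize, 0, 0, INTER_LANCZOS4);    // test features
        Mat test_gt  = imread(argv[6], 0); resize(test_gt, test_gt, imgSize, 0, 0, INTER_NEAREST);     // test ground truth
        Mat test_img = imread(argv[7], 1); resize(test_img, test_img, imgSize, 0, 0, INTER_LANCZOS4);  // image used for visualization

        // Preparing the parameters for the edge model
        vec_float_t vParams = {100, 0.01f};
        if (edgeModel <= 1 || edgeModel == 4) vParams.pop_back();   // these selections use only the first parameter
        if (edgeModel == 0) vParams[0] = 1;                         // selection 0 keeps model index 0 with parameter 1
        else edgeModel--;                                           // all other selections are shifted down by one

        // nodeTrainer, edgeTrainer, graphExt, decoder, marker and confMat are assumed
        // to be constructed at this point; their declarations are not part of this listing.

        // Building the graph
        graphExt.buildGraph(imgSize);

        // Node training: the whole image and its ground truth are passed at once
        nodeTrainer->addFeatureVecs(train_fv, train_gt);

        // Edge training: every pixel with x >= 1 and y >= 1 is paired with its left
        // and its upper neighbour, and each pair is added in both directions
        Mat featureVector1(nFeatures, 1, CV_8UC1);
        Mat featureVector2(nFeatures, 1, CV_8UC1);
        for (int y = 1; y < height; y++) {
            byte *pFv1 = train_fv.ptr<byte>(y);
            byte *pFv2 = train_fv.ptr<byte>(y - 1);
            byte *pGt1 = train_gt.ptr<byte>(y);
            byte *pGt2 = train_gt.ptr<byte>(y - 1);
            for (int x = 1; x < width; x++) {
                // Current pixel (x, y) and its left neighbour (x - 1, y)
                for (word f = 0; f < nFeatures; f++) featureVector1.at<byte>(f, 0) = pFv1[nFeatures * x + f];
                for (word f = 0; f < nFeatures; f++) featureVector2.at<byte>(f, 0) = pFv1[nFeatures * (x - 1) + f];
                edgeTrainer->addFeatureVecs(featureVector1, pGt1[x], featureVector2, pGt1[x - 1]);
                edgeTrainer->addFeatureVecs(featureVector2, pGt1[x - 1], featureVector1, pGt1[x]);

                // Current pixel (x, y) and its upper neighbour (x, y - 1)
                for (word f = 0; f < nFeatures; f++) featureVector2.at<byte>(f, 0) = pFv2[nFeatures * x + f];
                edgeTrainer->addFeatureVecs(featureVector1, pGt1[x], featureVector2, pGt2[x]);
                edgeTrainer->addFeatureVecs(featureVector2, pGt2[x], featureVector1, pGt1[x]);
            }
        }

        nodeTrainer->train();
        edgeTrainer->train();

        // Filling the graph with potentials computed from the test features
        Mat nodePotentials = nodeTrainer->getNodePotentials(test_fv);
        graphExt.setGraph(nodePotentials);
        graphExt.fillEdges(*edgeTrainer, test_fv, vParams);

        // Decoding
        vec_byte_t optimalDecoding = decoder.decode(100);

        // Evaluation. solution wraps the decoder output without copying it,
        // so optimalDecoding must stay alive as long as solution is used.
        Mat solution(imgSize, CV_8UC1, optimalDecoding.data());
        confMat.estimate(test_gt, solution);
        char str[255];
        snprintf(str, sizeof(str), "Accuracy = %.2f%%", confMat.getAccuracy());
        printf("%s\n", str);

        // Visualization: class overlay plus the accuracy label in the lower-right corner
        marker.markClasses(test_img, solution);
        rectangle(test_img, Point(width - 160, height - 18), Point(width, height), CV_RGB(0, 0, 0), -1);
        putText(test_img, str, Point(width - 155, height - 5), cv::HersheyFonts::FONT_HERSHEY_SIMPLEX, 0.45, CV_RGB(225, 240, 255), 1, cv::LineTypes::LINE_AA);
        imwrite(argv[8], test_img);

        imshow("Image", test_img);
        waitKey(1000);

        return 0;
    }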