Direct Graphical Models

v.1.7.0

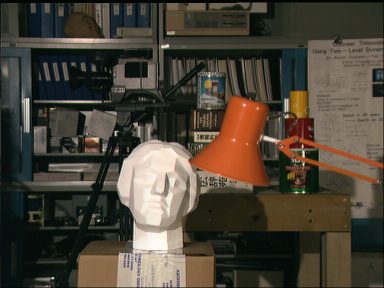

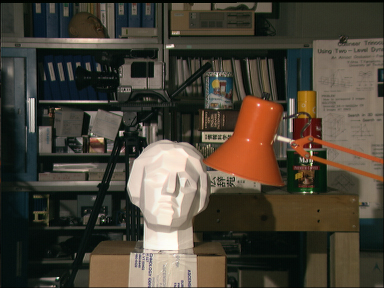

Estimating the disparity field between two stereo images is a common task in computer vision, e.g., to determine a dense depth map. Please refer to Chapter 1 of the master thesis "3D Map Reconstruction with Variational Methods" for an introduction to disparity field estimation. An evaluation and qualitative comparison of a large number of algorithms for disparity field estimation can be found on the vision.middlebury.edu website. In this tutorial we show how to develop a probabilistic model for estimating a high-quality disparity field between two stereo images.

We start this tutorial in the same way as the Demo Train or Demo Dense tutorials: by reading the command line arguments and initializing the basic DGM classes. Our primary input data here is a pair of stereo images: imgL and imgR. We represent disparity as an integer shift in pixels: the distance along the x-axis between the positions of the same pixel in the left and right images. Every possible disparity value between the given minDisparity and maxDisparity is a class label (state) with its own probability.
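The correspondence between class labels and disparities can be sketched as follows. This is a minimal illustration and not DGM code: the helper names are hypothetical, and it assumes the half-open range [minDisparity, maxDisparity) that the node potential formula in this tutorial uses.

```cpp
#include <cassert>

// Hypothetical helpers (not part of DGM): map between a class label (state)
// and a disparity in pixels, assuming disparities in [minDisparity, maxDisparity).

// Number of class labels, one per admissible integer disparity.
inline int numStates(int minDisparity, int maxDisparity)
{
    assert(maxDisparity > minDisparity);
    return maxDisparity - minDisparity;
}

// State 0 corresponds to minDisparity, state 1 to minDisparity + 1, and so on.
inline int stateToDisparity(int state, int minDisparity)
{
    return minDisparity + state;
}

// Inverse mapping: the state index of a given disparity.
inline int disparityToState(int disparity, int minDisparity)
{
    return disparity - minDisparity;
}
```

With minDisparity = 2 and maxDisparity = 18 this yields 16 states, and state 3 stands for a shift of 5 pixels.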

Please note that in this tutorial we use a pairwise graphical model with edges connecting every node with its four direct neighbors. You can easily switch to a complete (dense) graphical model by changing the factory DirectGraphicalModels::CGraphPairwiseKit to DirectGraphicalModels::CGraphDenseKit. The optimal parameters for the dense edge model may be obtained using Demo Parameters Estimation.

Next we build a 2D graph grid and add a default edge model:
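Independently of the DGM API, the structure of such a grid can be illustrated with a small library-free sketch. The function name gridEdges4 and the row-major node indexing are assumptions made for this example; the code only enumerates which nodes a four-neighbor grid connects and does not build a DGM graph.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Illustrative only: lists the undirected edges of a 4-connected grid.
// Node (x, y) gets the index y * width + x (an assumed row-major layout).
std::vector<std::pair<std::size_t, std::size_t>> gridEdges4(std::size_t width, std::size_t height)
{
    assert(width > 0 && height > 0);
    std::vector<std::pair<std::size_t, std::size_t>> edges;
    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            const std::size_t idx = y * width + x;
            // Adding only the right and bottom neighbors visits each edge once.
            if (x + 1 < width)  edges.emplace_back(idx, idx + 1);
            if (y + 1 < height) edges.emplace_back(idx, idx + width);
        }
    }
    return edges;
}
```

A grid of width w and height h has (w - 1) * h + w * (h - 1) such edges, so the pairwise model grows linearly with the number of pixels.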

The trickiest part of this tutorial is filling the graph nodes with potentials. We do not train a node potential model, but estimate the potentials directly from the images using the formula: \( p(disp) = 1 - \frac{\left|imgL(x, y) - imgR(x + disp, y)\right|}{255} \), where \( disp \in \left[minDisp; maxDisp \right) \). This gives the highest potentials to those disparities for which the pixel values in the left and right images are nearly the same.
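The formula can be evaluated for a single pixel as in the following sketch. It is written against plain std::vector rows of 8-bit grayscale values rather than the DGM or OpenCV types; the function name nodePotentials is hypothetical, and assigning a potential of 0 when x + disp leaves the image is an assumption of this example, not something the formula prescribes.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Sketch of p(disp) = 1 - |imgL(x, y) - imgR(x + disp, y)| / 255 for one pixel.
// rowL and rowR hold the same image row y of the left and right images.
// Assumption: if x + disp falls outside the row, the potential is set to 0.
std::vector<float> nodePotentials(const std::vector<std::uint8_t>& rowL,
                                  const std::vector<std::uint8_t>& rowR,
                                  int x, int minDisp, int maxDisp)
{
    assert(rowL.size() == rowR.size());
    assert(x >= 0 && x < static_cast<int>(rowL.size()));
    assert(maxDisp > minDisp);
    const int width = static_cast<int>(rowL.size());
    std::vector<float> pot;
    pot.reserve(static_cast<std::size_t>(maxDisp - minDisp));
    for (int disp = minDisp; disp < maxDisp; ++disp) {   // half-open range
        const int xr = x + disp;
        if (xr < 0 || xr >= width) {
            pot.push_back(0.0f);                         // assumed border handling
            continue;
        }
        const float diff = std::fabs(static_cast<float>(rowL[x]) - static_cast<float>(rowR[xr]));
        pot.push_back(1.0f - diff / 255.0f);             // identical pixels give 1
    }
    return pot;
}
```

Element s of the returned vector is the potential of the state with disparity minDisp + s.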

Now, to improve the result of the stereo estimation, we run inference and decoding.

You can check what the results look like without inference. To do so, set the number of iterations to zero, i.e. use "decode(0)". This gives the disparity field obtained without applying CRFs.
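Conceptually, a result without inference corresponds to choosing, for every pixel independently, the state with the highest node potential. The sketch below illustrates that idea only; it assumes this is what decoding with zero iterations amounts to and does not reproduce the DGM decoder. The function name bestState is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Returns the index (state) of the largest potential of one node.
// std::max_element returns the first maximum, so ties go to the lowest state.
std::size_t bestState(const std::vector<float>& potentials)
{
    assert(!potentials.empty());
    return static_cast<std::size_t>(
        std::distance(potentials.begin(),
                      std::max_element(potentials.begin(), potentials.end())));
}
```

Because each pixel is decided on its own, such a result is typically noisier than one in which the edge model lets neighboring pixels influence each other.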

And with a little more effort we convert the decoding results into a disparity field:
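As a library-independent sketch of this conversion, the code below turns decoded labels into 8-bit gray values. The function name labelsToDisparityImage and the scaling by 255 / maxDisparity are assumptions chosen for display purposes; the tutorial's own conversion may scale or post-process the result differently.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Converts decoded labels into 8-bit gray values for display.
// Assumptions: label s stands for disparity minDisparity + s, and
// 0 <= minDisparity < maxDisparity <= 256, so the scaled value fits into a byte.
std::vector<std::uint8_t> labelsToDisparityImage(const std::vector<int>& labels,
                                                 int minDisparity, int maxDisparity)
{
    assert(minDisparity >= 0 && maxDisparity > minDisparity && maxDisparity <= 256);
    std::vector<std::uint8_t> img;
    img.reserve(labels.size());
    for (int s : labels) {
        assert(s >= 0 && s < maxDisparity - minDisparity);
        const int disparity = minDisparity + s;   // label -> shift in pixels
        // Integer scaling: disparity < maxDisparity, so the result is below 255.
        img.push_back(static_cast<std::uint8_t>(disparity * 255 / maxDisparity));
    }
    return img;
}
```

The returned vector is in the same pixel order as the labels, so it can be copied row by row into an image of the original size.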